An annoying part about getting older is realizing how cyclical things are and that much of life is the same hustles and hassles with new names and labels. Whatever your thoughts about cryptocurrency (I'm a skeptic but also own a small amount), it's indisputable that the sector is rife with scams, rugpulls, and unregulated securities. As a result, billions were lost to crypto hacks and scams over the last two years (see table below). That figure doesn't count firms like FTX, Celsius, and Voyager that went under, wiping out an additional 200 billion dollars from retail investors and people who trusted the shadow banks called crypto exchanges.

The Securities and Exchange Commission (SEC) finally woke up from its multi-decade slumber this month and issued massive fines against several crypto exchanges and the influencers shilling for them. Retired NBA star Paul Pierce was fined 1.4 million dollars for promoting a token called Ethereum Max. EMax was a pump-and-dump scheme in which new investors were served up as exit liquidity for the founders. EMax currently trades at $0.00000000099 per worthless token. Kim Kardashian was fined a similar amount last fall for touting the same token. Regulators also have stablecoins in their sights. Crypto exchange Kraken agreed to pay a 30 million dollar fine and shut down its staking program (if you don’t know what crypto staking is, you’re probably better off that way). Kraken’s rival Binance is expecting a massive fine as well.

2022 was a record year for hackers in crypto - Source: Decrypt, data from Chainalysis

The handwriting was on the wall for years about crypto scams, but federal authorities waited over a decade before acting. In the interim, millions of people were harmed. A regulatory framework for crypto exists and has existed since before the first token came to market. We can't afford to wait fourteen or even four years for the government to set the ground rules for AI. The potential for society-wide harm is incalculably larger.

After-the-fact debates are biased toward the expansion, rather than the limitation, of a practice. In 2016, the city of Dallas had to reckon with whether it would allow police to kill a barricaded shooter with a remote-operated robot. The answer was "yes," this was an acceptable use of force. Unfortunately, the decision was made retroactively, months after the police had killed their target (with a Remotec Andros Mark V-A1, manufactured by Northrop Grumman). The police department refused to release documents related to the decision to use the robot, and the city absolved the chief and the entire chain of command after the incident. The police chief, David Brown, now runs the police department in Chicago, and there’s now a precedent for using remote-operated robots to kill people.

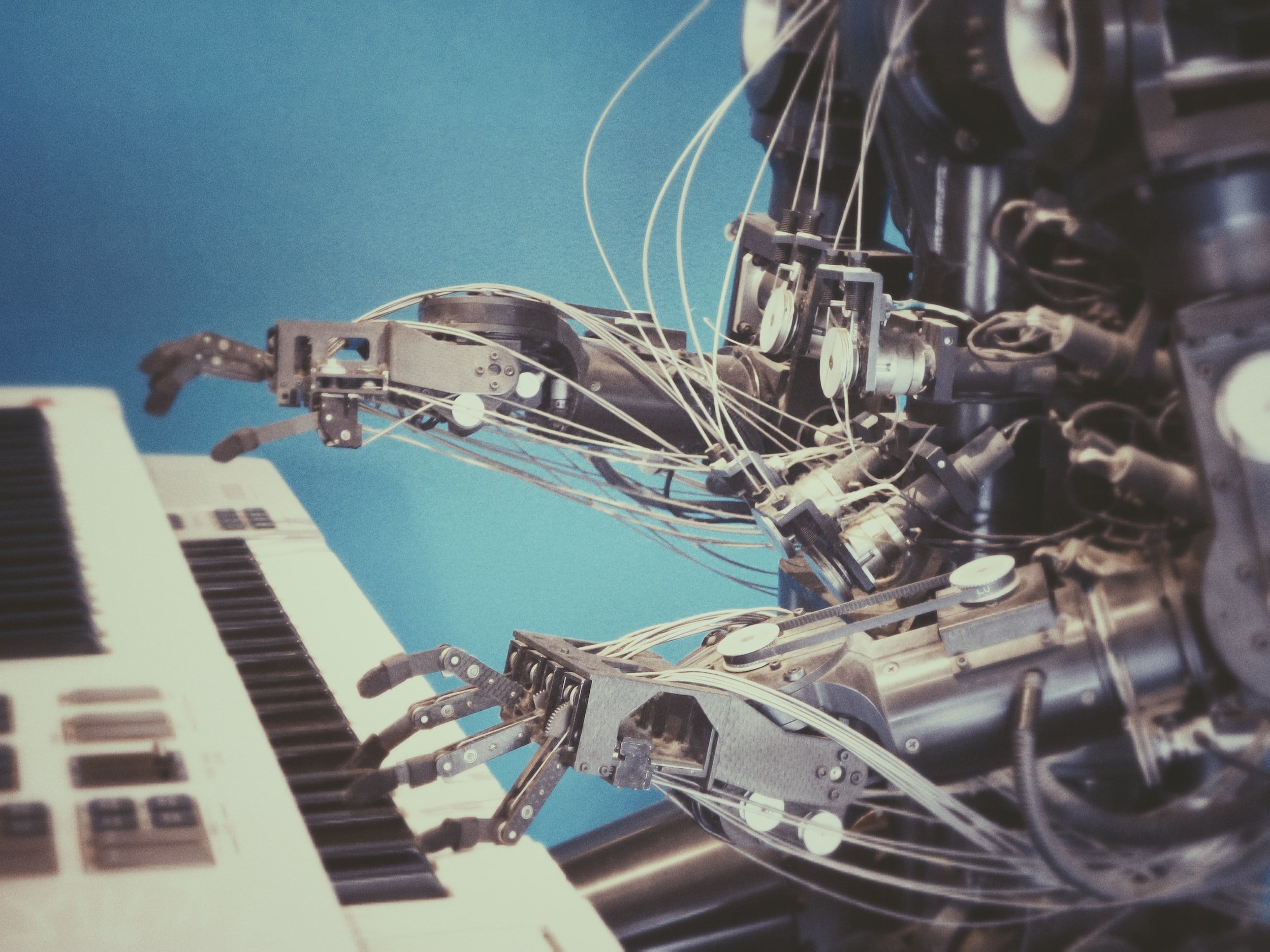

As venture capital abandons crypto projects in favor of cowboy AI projects, policymakers can't be passive. AI misused in journalism, financial markets, deepfake-aided scams, and law enforcement's use of force has the potential to do far more damage than the crypto bros ever did. Letting the DARPA and Boston Dynamics chips (see video) fall where they may is societal malpractice. If my concerns here seem alarmist to you, imagine trying to explain in-flight Wi-Fi to someone in 1994.

We can't wait until AI systems are deployed to decide what limits we will put on their use. If you don't think there are people in law enforcement salivating to deploy AI robots and drones in low-income and Black neighborhoods, you don't know American history.